Predicting Item Difficulty in a Reading Test

A Construct Identification Study of the Austrian 2009 Baseline English Reading Test

Excerpt

Table Of Contents

- Cover

- Title

- Copyright

- About the author

- About the book

- This eBook can be cited

- Acknowledgements

- Contents

- Abbreviations

- 1 Introduction

- 1.1 The Background to the Study

- 1.2 The Research Objectives

- 1.3 The Structure of the Publication

- 2 Political and Historical Context

- 2.1 The Development of Educational Standards in Austria

- 2.2 Developing Standard-Based Assessment Tasks

- 2.2.1 E8 Test Purpose

- 2.2.2 E8 Test Constructs

- 2.2.3 E8 Item Development

- 2.2.4 Reporting Results

- 2.3 The E8 Baseline Reading Test

- 2.3.1 Contextual and Situational Constraints

- 2.3.2 Construct Definition

- 2.3.3 Operationalisation

- 2.3.4 Interpreting and Reporting Test Results

- 3 Approaches to Language Test Validation

- 3.1 Content Validity

- 3.2 Criterion-Related Validity

- 3.3 Construct Validity

- 3.4 Face Validity

- 3.5 Unitary Concepts of Validity

- 3.6 Operational Frameworks

- 4 Item Content Analysis

- 4.1 Defining and Modelling Reading Comprehension

- 4.1.1 Bottom-Up Models

- 4.1.2 Top-Down Models

- 4.1.3 Interactive Models

- 4.1.4 Componential Models

- 4.1.5 A Cognitive Processing Model for Reading Comprehension

- 4.2 Potential Predictors of Item Difficulty

- 4.2.1 Contextual Features Affecting Reading Comprehension

- 4.2.2 Cognitive Reading Processes as Potential Predictors of Item Difficulty

- 4.3 Operationalisation of Predictor Variables

- 5 Statistical Analyses

- 5.1 Relationships Among Item Content Variables

- 5.2 Content Characteristics and Empirical Difficulty

- 5.2.1 Data

- 5.2.2 Methods

- 6 Results

- 6.1 Simple Regression Analyses

- 6.2 Analyses of Variance

- 6.3 Multiple Regression Models

- 7 Conclusion

- 7.1 Answers to the Research Questions

- 7.2 Limitations and Suggestions for Further Research

- Appendices

- Figures

- Tables

- References

- Series Index

1.1 The Background to the Study

Unlike many other European countries, Austria has no strong tradition of standardised foreign language testing in classrooms, nor of external or large-scale assessments that provide authorities with data for monitoring and evaluating schools and the education system in terms of students’ foreign language ability. Rather, pupils have been, and still are, continuously assessed by their teachers using summative assessment methods such as written achievement tests and dictations, oral exams, and presentations. In addition, various formative activities, for instance vocabulary quizzes, homework assignments, and oral tasks, are used to observe classroom participation. This traditional approach to assessing pupils is largely norm-referenced: pupils’ performances are compared relative to one another (Eurydice, 2009).

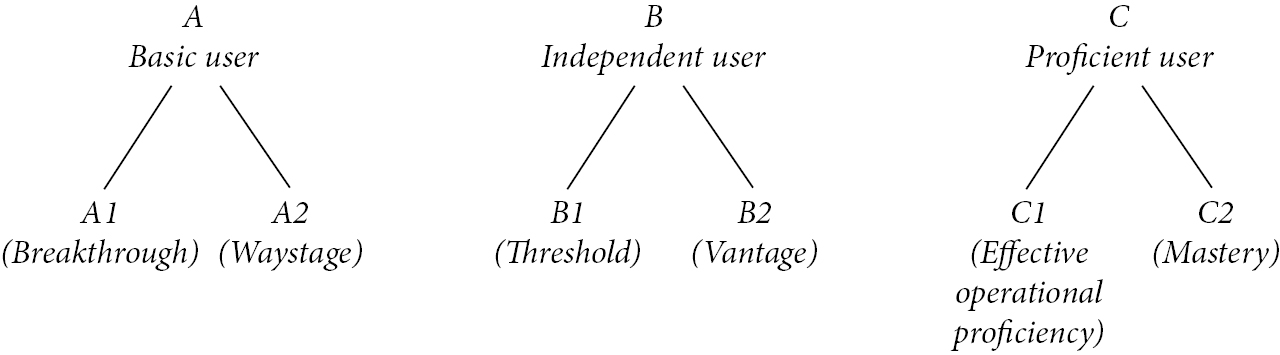

However, a perceived need for greater comparability of students’ performances in general, and public doubts about the benefit and quality of foreign language education in particular, called for a criterion-referenced approach to assessment. Such an approach provides stakeholders, such as students, their parents, and educational authorities, with transparent and explicit criteria for establishing which language activities a learner should be able to carry out in English. This requires a language proficiency framework that sets out such criteria. One of the most widely used and recognised frameworks internationally is the Council of Europe’s (2001) Common European Framework of Reference for Languages: Learning, Teaching, Assessment (CEFR), which describes “the levels of proficiency required by existing standards, tests and examinations in order to facilitate comparisons between different systems of qualifications” (p. 21). It organises language learning and learner achievement into six broad levels, as illustrated in Fig. 1. According to the authors, “an outline framework of six broad levels gives an adequate coverage of the learning space relevant to European language learners” (Council of Europe, 2001, p. 23).

Based on the CEFR, the following changes necessary for a criterion-referenced approach to assessment were introduced in the Austrian school system:

• the establishment of CEFR-level-based national curricula (levels A1 to B1 for lower secondary schools; levels B1 to B2 for higher secondary schools),

• the legal introduction of CEFR-level-based National Educational Standards for English at levels A2 and B1 (E8 descriptors), outlined in the Bildungsstandards-Verordnung of the Austrian Ministry for Education (Bundeskanzleramt, 2009), and

• the introduction of comprehensive, regular, and objective tests of students’ English skills, as defined by the E8 descriptors, to drive improvement in the quality of both classroom practice and the school system. (For more details on how the CEFR was used for the development of standard-based test items, see Section 2.2.)

Generally speaking, the Austrian E8 descriptors and the resulting standards-tests divide language proficiency into the following levels:

Details

- Pages: 210

- Year: 2020

- ISBN (PDF): 9783631818794

- ISBN (ePUB): 9783631818800

- ISBN (MOBI): 9783631818817

- ISBN (Hardcover): 9783631812495

- DOI: 10.3726/b16819

- Language: English

- Publication date: 2020 (March)

- Keywords: Test validation, Reading strategies, Predictor variables, EFL reading, Cognitive reading processes, Teaching reading, Item development, Educational standards, Test development

- Published: Berlin, Bern, Bruxelles, New York, Oxford, Warszawa, Wien, 2020. 210 pp., 19 fig. b/w, 45 tables.